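VMware ESXi GPU passthrough verification and NVIDIA driver installation

Background

The physical machine has four NVIDIA L20 GPUs (4 × 48 GB) and runs VMware ESXi. The goal is to pass the physical GPUs through to an Ubuntu 22.04 virtual machine.

- ESXi-8.0U3e-24677879-standard

- Ubuntu 22.04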

Passthrough configuration

Virtual machine configuration

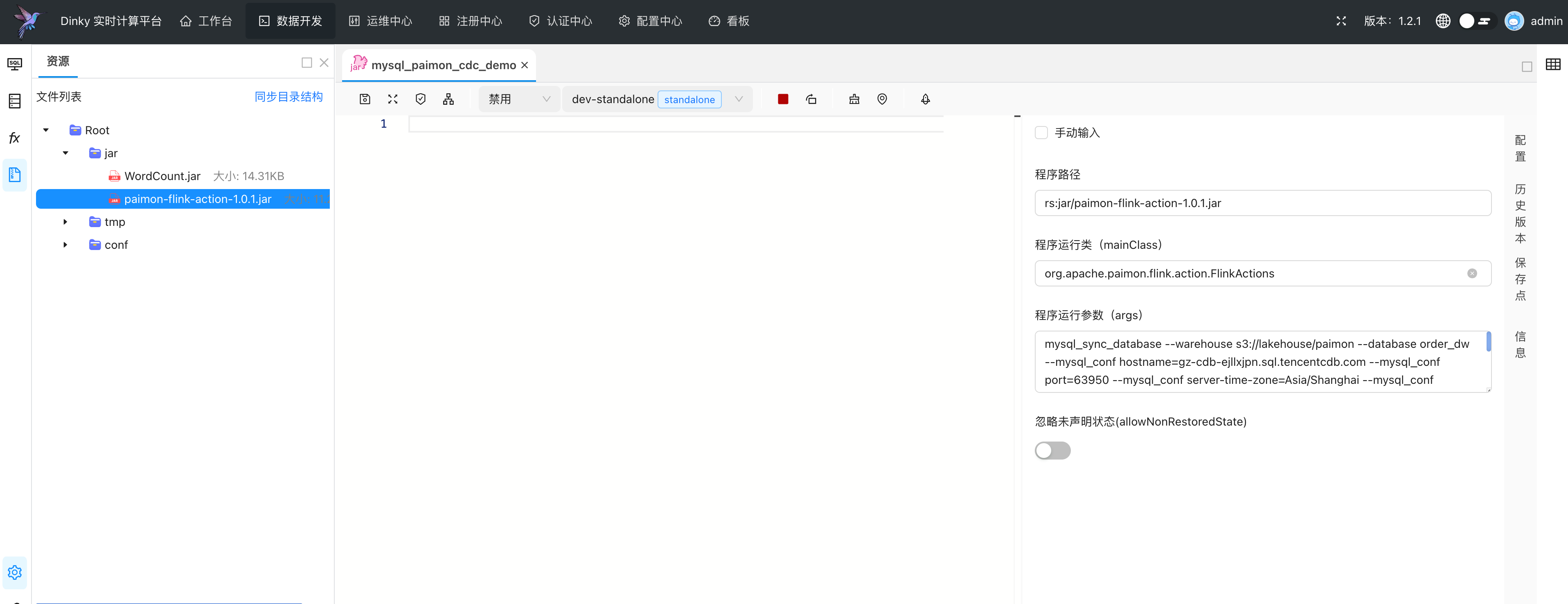

- Create the virtual machine and configure its parameters

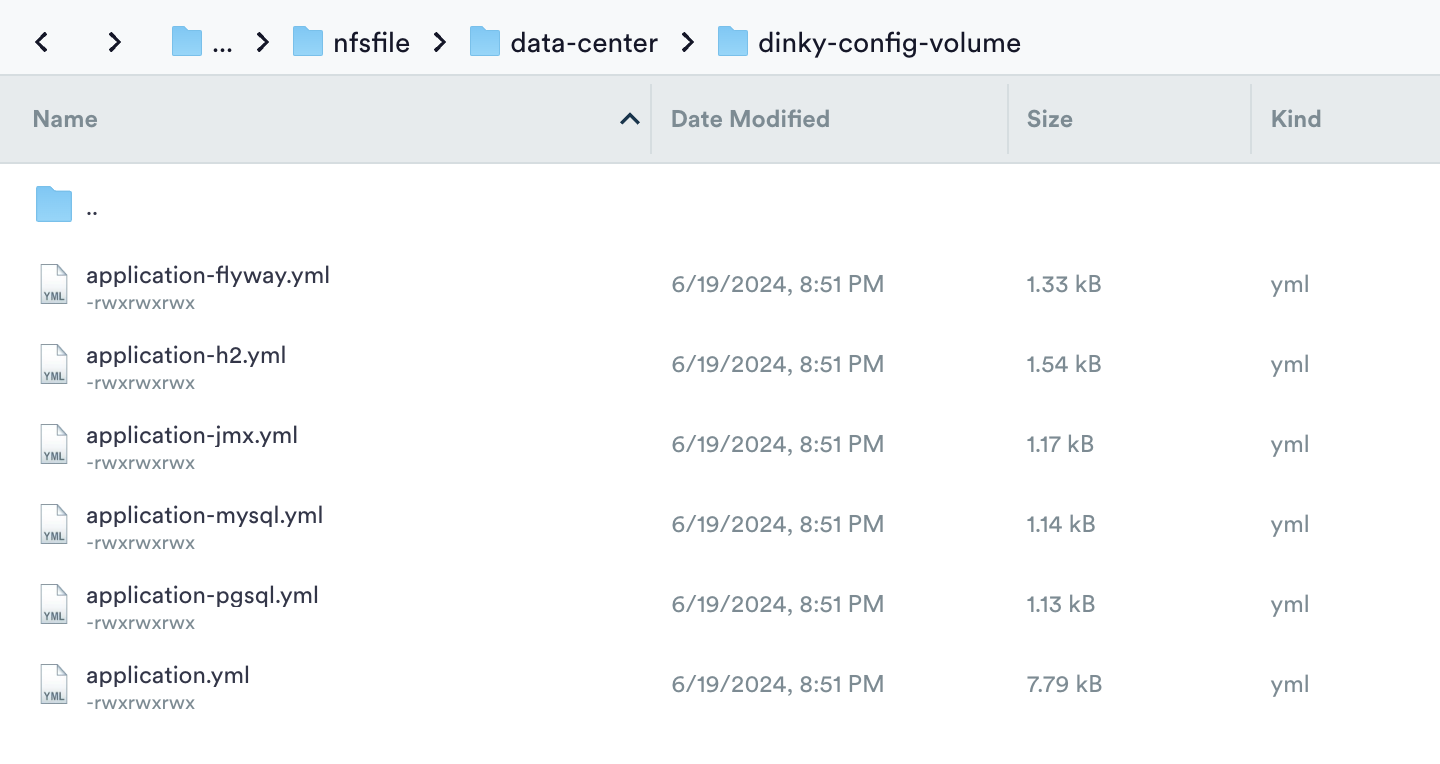

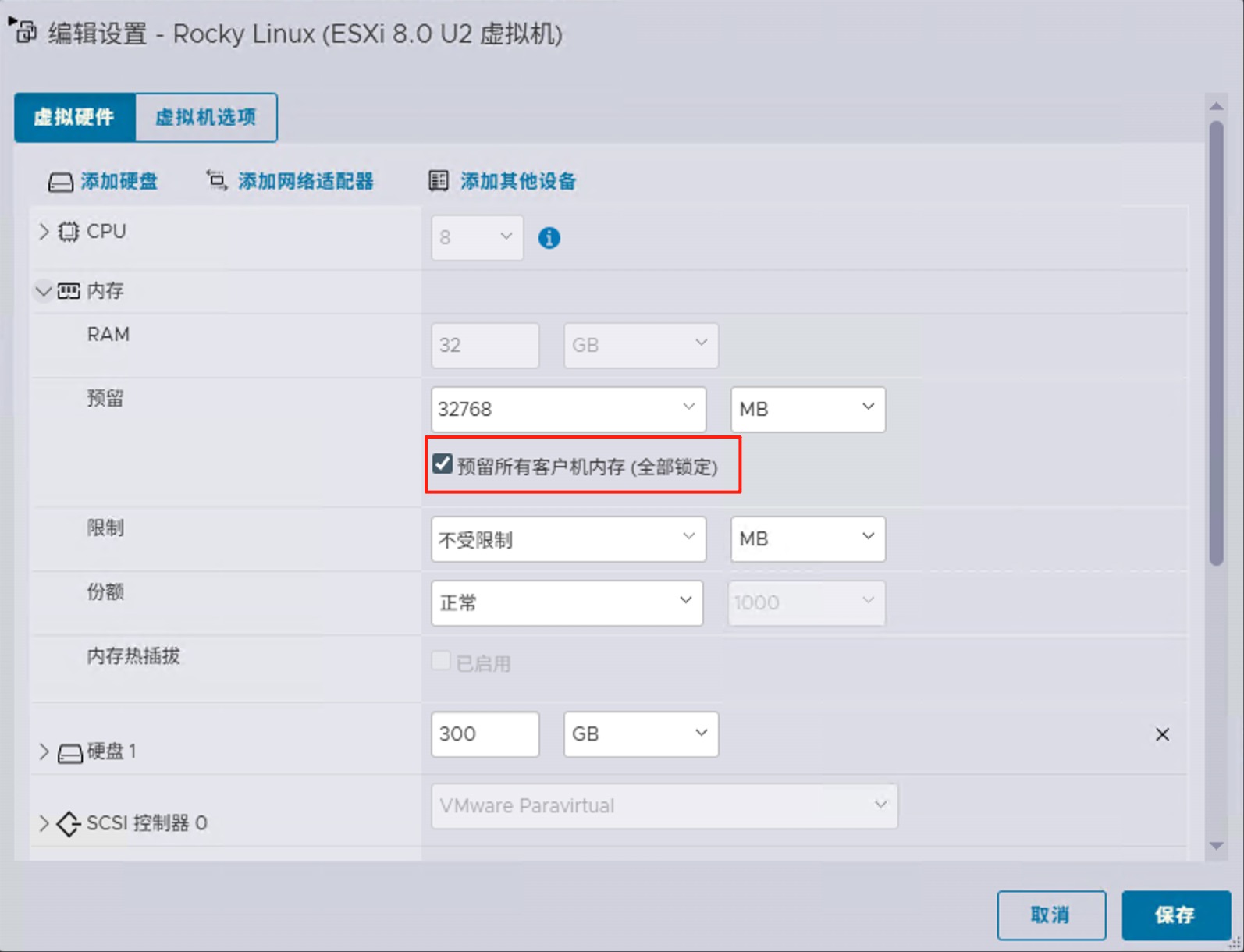

- Reserve all memory (ESXi requires a full memory reservation for VMs with passthrough devices)

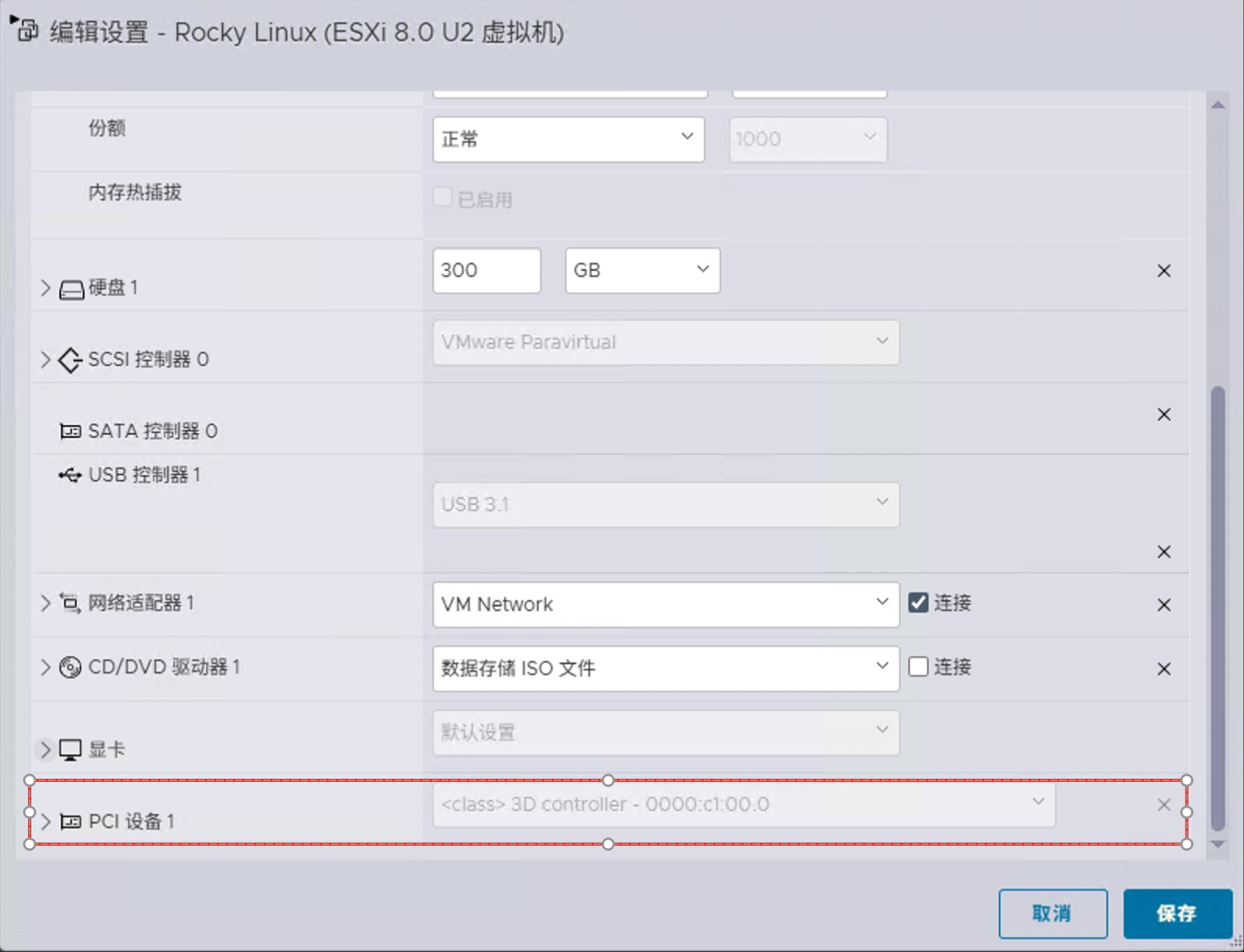

- In the VM settings, click "Add Other Device" > "PCI Device", then find and select the NVIDIA GPU in the list (for example, 0000:c1:00.0).

- Disable UEFI Secure Boot


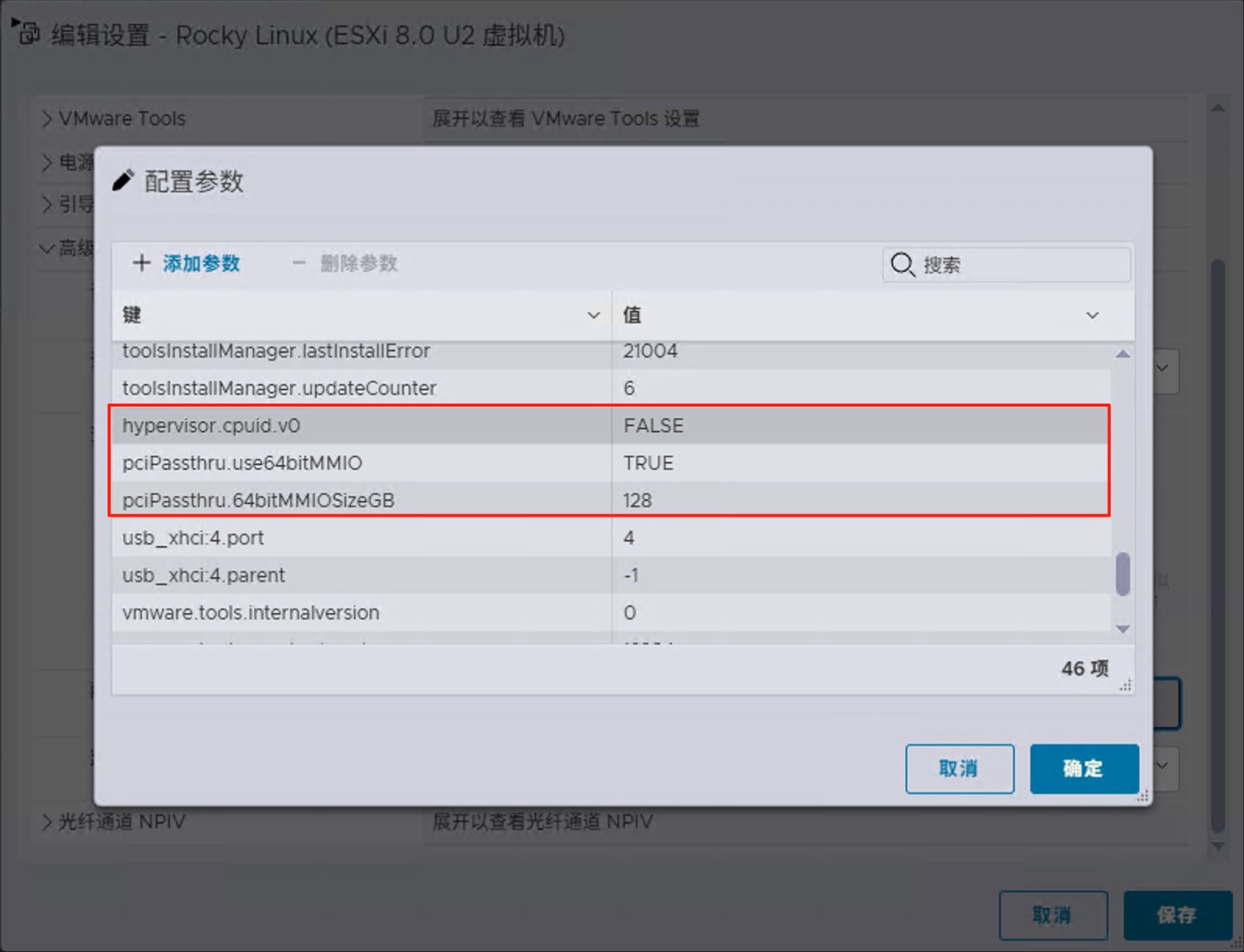
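Secure Boot matters here because, when it is enabled, the kernel generally refuses to load NVIDIA modules built by DKMS unless they are signed with an enrolled MOK key. After Ubuntu is installed, the sketch below can confirm from inside the guest that Secure Boot is really off. It assumes the `mokutil` package is installed in the guest. `secure_boot_state` is a hypothetical helper name, not part of any tool.

```shell
#!/bin/sh
# secure_boot_state (hypothetical helper): reads the output of
# `mokutil --sb-state` on stdin and prints enabled, disabled or unknown.
secure_boot_state() {
  out=$(cat)
  case "$out" in
    *"SecureBoot enabled"*) echo enabled ;;
    *"SecureBoot disabled"*) echo disabled ;;
    *) echo unknown ;;  # e.g. non-EFI boot or unexpected output
  esac
}

# Assumes mokutil is installed in the Ubuntu guest
if command -v mokutil >/dev/null 2>&1; then
  mokutil --sb-state 2>&1 | secure_boot_state
else
  echo "mokutil not found"
fi
```

For this setup the expected answer is `disabled`.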

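To optimize the performance and compatibility of GPU passthrough, add the following parameters to the VM's advanced configuration:

One of these parameters hides the virtualization environment so that the VM can better recognize and use the NVIDIA GPU.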
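The parameter names are missing from the description above. The entries commonly used for this kind of setup are listed here as an assumption; they are not recovered from the original. `hypervisor.cpuid.v0 = FALSE` hides the hypervisor CPUID bit, which matches the description above. `pciPassthru.use64bitMMIO = TRUE` is used together with `pciPassthru.64bitMMIOSizeGB`. The hypothetical `passthru_params` function below only prints them in the `key = "value"` form of a .vmx file. In the vSphere Client they are typically entered under VM Options > Advanced > Configuration Parameters.

```shell
#!/bin/sh
# passthru_params (hypothetical helper): print advanced-configuration
# entries commonly used for NVIDIA passthrough on ESXi.
# Entry names are an assumption, not taken from the original guide.
passthru_params() {
  mmio_gb="$1"   # value for pciPassthru.64bitMMIOSizeGB
  printf '%s = "%s"\n' \
    hypervisor.cpuid.v0 FALSE \
    pciPassthru.use64bitMMIO TRUE \
    pciPassthru.64bitMMIOSizeGB "$mmio_gb"
}

passthru_params 256
```

The argument is the MMIO size worked out with the calculation that follows. 256 matches the two-48 GB example.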

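Calculating pciPassthru.64bitMMIOSizeGB (reference: https://earlruby.org/tag/use64bitmmio/)

To compute the 64bitMMIOSizeGB value, add up the VRAM (in GB) of all GPUs attached to the VM. If the total is exactly a power of 2, set pciPassthru.64bitMMIOSizeGB to the next power of 2.

If the total falls between two powers of 2, round up to the next power of 2, then round up once more.

The powers of 2 are 2, 4, 8, 16, 32, 64, 128, 256, 512, 1024, …

For example, a VM with two 24 GB GPUs passed through should use 128. The total is 24 × 2 = 48, which lies between 32 and 64, so round up to 64 and then again to 128.

Likewise, a VM with two 48 GB GPUs passed through should use 256. The total is 48 × 2 = 96, which lies between 64 and 128, so round up to 128 and then again to 256.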
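Both cases of the rule reduce to one step: take the smallest power of two that is at least the total VRAM, then double it. Below is a minimal sketch in POSIX shell; `mmio_size_gb` is a hypothetical helper name. It reproduces both examples above.

```shell
#!/bin/sh
# mmio_size_gb (hypothetical helper): apply the rounding rule above.
# Arguments: VRAM of each passed-through GPU, in GB.
mmio_size_gb() {
  total=0
  for vram in "$@"; do
    total=$((total + vram))
  done
  # Smallest power of two >= total (a power of two maps to itself)
  pow=1
  while [ "$pow" -lt "$total" ]; do
    pow=$((pow * 2))
  done
  # Round up one more power of two
  echo $((pow * 2))
}

mmio_size_gb 24 24   # two 24 GB GPUs, prints 128
mmio_size_gb 48 48   # two 48 GB GPUs, prints 256
```

Passing all four L20 cards in this machine to one VM (4 × 48 GB = 192 GB) would give 512 under the same rule.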

Linux driver installation

- Update the system

```shell
sudo apt update && sudo apt upgrade -y
```

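- Disable the open-source Nouveau driver

```shell
sudo nano /etc/modprobe.d/blacklist.conf
# Append the following lines to the end of the file:
blacklist nouveau
options nouveau modeset=0
```

After saving the file, run:

```shell
sudo update-initramfs -u
sudo reboot
```

After the reboot, verify that Nouveau is disabled (no output means success):

```shell
lsmod | grep nouveau
```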
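The blacklist and the check can also be scripted. The sketch below is a minimal version with hypothetical helper names. It assumes a separate file, `/etc/modprobe.d/blacklist-nouveau.conf`; modprobe reads any `.conf` file in that directory. One function writes the blacklist lines without an editor. The other checks `/proc/modules`, the data `lsmod` prints, for a loaded `nouveau` module.

```shell
#!/bin/sh
# Hypothetical helpers, not part of the original guide.

# write_nouveau_blacklist FILE: write the two blacklist lines to FILE.
# On a real system FILE lives under /etc/modprobe.d/ and needs root.
write_nouveau_blacklist() {
  printf '%s\n' 'blacklist nouveau' 'options nouveau modeset=0' > "$1"
}

# nouveau_loaded [MODULES_FILE]: succeed if a module named nouveau is listed.
# Defaults to /proc/modules, the same data lsmod prints.
nouveau_loaded() {
  grep -q '^nouveau ' "${1:-/proc/modules}" 2>/dev/null
}

# Post-reboot check, equivalent to `lsmod | grep nouveau` printing nothing
if nouveau_loaded; then
  echo "nouveau is still loaded"
else
  echo "nouveau is not loaded"
fi
```

On the VM, the blacklist step would be run from a root shell as `write_nouveau_blacklist /etc/modprobe.d/blacklist-nouveau.conf`, followed by `update-initramfs -u` and a reboot.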

- Install the driver

- Install the GPU tooling (CUDA toolkit)

```shell
sudo apt-get install nvidia-cuda-toolkit
```

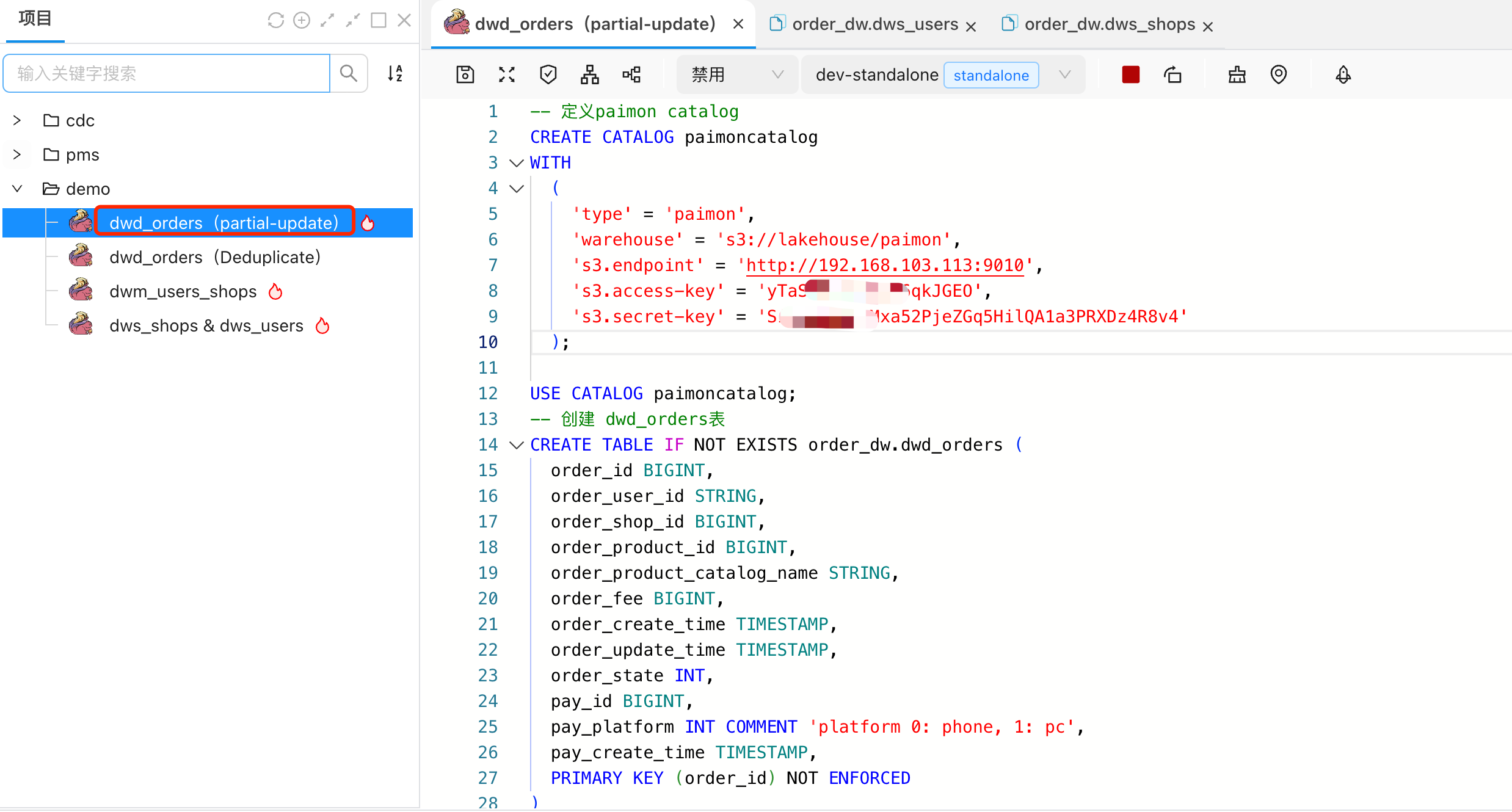
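To confirm that the toolkit is installed, run `nvcc --version`. It prints a line of the form `Cuda compilation tools, release X.Y, VX.Y.Z`, and the exact version depends on the Ubuntu package. The hypothetical `cuda_release` helper below extracts the release number from that output.

```shell
#!/bin/sh
# cuda_release (hypothetical helper): print the "release X.Y" number found
# in `nvcc --version` output read from stdin.
cuda_release() {
  sed -n 's/.*release \([0-9][0-9.]*\),.*/\1/p'
}

if command -v nvcc >/dev/null 2>&1; then
  nvcc --version | cuda_release
else
  echo "nvcc not found; is nvidia-cuda-toolkit installed?"
fi
```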

- Check which GPU driver the system recommends

Note which driver is marked as recommended and write it down.

```shell
sudo ubuntu-drivers devices
```

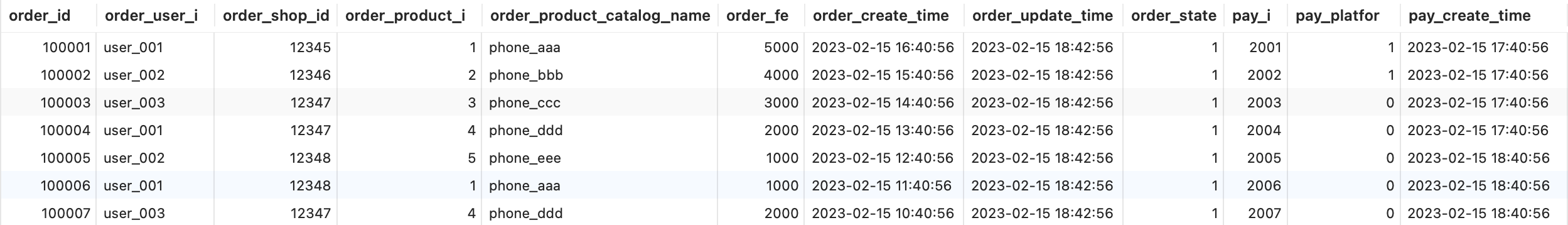

```text
(base) root@ai-03-deploy:~# sudo ubuntu-drivers devices
== /sys/devices/pci0000:00/0000:00:0f.0 ==
modalias : pci:v000015ADd00000405sv000015ADsd00000405bc03sc00i00
vendor : VMware
model : SVGA II Adapter
manual_install: True
driver : open-vm-tools-desktop - distro free

== /sys/devices/pci0000:03/0000:03:00.0 ==
modalias : pci:v000010DEd000026BAsv000010DEsd00001957bc03sc02i00
vendor : NVIDIA Corporation
driver : nvidia-driver-535 - distro non-free
driver : nvidia-driver-575-server-open - distro non-free
driver : nvidia-driver-550-open - distro non-free
driver : nvidia-driver-575-open - distro non-free
driver : nvidia-driver-570 - distro non-free
driver : nvidia-driver-550 - distro non-free
driver : nvidia-driver-570-server-open - distro non-free
driver : nvidia-driver-570-open - distro non-free
driver : nvidia-driver-575 - distro non-free recommended
driver : nvidia-driver-575-server - distro non-free
driver : nvidia-driver-535-open - distro non-free
driver : nvidia-driver-535-server-open - distro non-free
driver : nvidia-driver-570-server - distro non-free
driver : nvidia-driver-535-server - distro non-free
driver : xserver-xorg-video-nouveau - distro free builtin
```

Here the recommended driver is nvidia-driver-575.

- Add the driver repository

```shell
sudo add-apt-repository ppa:graphics-drivers/ppa
sudo apt-get update
```

- Choose a version and install it

```shell
sudo apt install nvidia-driver-575
sudo reboot
```
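You can also pick out the recommended package from the `ubuntu-drivers devices` listing with a filter instead of by eye. The hypothetical `recommended_driver` helper below keeps `driver` lines that contain `recommended` and prints the package name, which is the third whitespace-separated field.

```shell
#!/bin/sh
# recommended_driver (hypothetical helper): read `ubuntu-drivers devices`
# output on stdin and print the package marked "recommended".
recommended_driver() {
  awk '$1 == "driver" && /recommended/ { print $3 }'
}

if command -v ubuntu-drivers >/dev/null 2>&1; then
  ubuntu-drivers devices 2>/dev/null | recommended_driver
else
  echo "ubuntu-drivers not found"
fi
```

For the listing above this yields `nvidia-driver-575`. It could then feed the install step as `sudo apt install "$(ubuntu-drivers devices | recommended_driver)"`.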


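- Verify the installation

`nvitop` is a third-party GPU monitor that must be installed separately (for example with pip).

```shell
nvidia-smi
nvitop
```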
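To check that every passed-through GPU is visible in the guest, `nvidia-smi` can print one CSV row per GPU with `--query-gpu=name,memory.total --format=csv,noheader,nounits` (memory in MiB). The hypothetical `gpu_summary` helper below counts those rows and sums the memory. The GPU count should match the number of PCI devices added to the VM.

```shell
#!/bin/sh
# gpu_summary (hypothetical helper): read rows of "name, memory_MiB" on
# stdin and print the GPU count and the total memory in MiB.
gpu_summary() {
  awk -F', *' 'NF >= 2 { n++; total += $2 }
    END { printf "gpus=%d total_mib=%d\n", n, total }'
}

if command -v nvidia-smi >/dev/null 2>&1; then
  nvidia-smi --query-gpu=name,memory.total --format=csv,noheader,nounits |
    gpu_summary
else
  echo "nvidia-smi not found"
fi
```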
